MagIC Help Library

1 Introduction

1.1 Preface

The Magnetics Information Consortium (MagIC) initiative has been established to address the need for a public digital archive for the international magnetics research community. As a multi-user facility, MagIC provides a place for the community to archive new data as soon after their collection as is reasonable, preferably at the time of publication in the peer-reviewed literature. These data are stored in and served from an Oracle 10x database that is part of the overarching online EarthRef.org database, while software tools are provided to help scientists prepare data for automated uploading. The MagIC Database Team has already transferred the data and metadata of existing magnetic databases (GPMDB, PINT, etc.) created under the auspices of IAGA into the MagIC database.

The databases of the Magnetics Information Consortium (MagIC) thus contain user-contributed data associated with paleomagnetic and rock magnetic sources. These data may come from publications in Earth sciences journals, theses or books. They are contributed on a Publication-by-Publication basis in the form of two corresponding files created by the MagIC Console Software: a MagIC Format ASCII Text (*.txt) file and a Microsoft Excel© SmartBook (*.xls) file. These files are uploaded into the MagIC database by using the MagIC Contribution Wizard.

1.1.1 Goals and Philosophy

The overarching goal of the Magnetics Information Consortium (MagIC) is to develop and maintain databases and associated information technology for the international paleomagnetic, geomagnetic and rock magnetic community. MagIC is hosted under the umbrella of EarthRef.org allowing coordination with other information technology initiatives in the Earth sciences, such as GERM, SBN and ERESE. This facilitates interdisciplinary research, by allowing ready access to relevant information in related disciplines. MagIC serves the larger scientific and educational community by making its databases freely accessible and by providing visualization tools designed for users with various levels of expertise.

The PMAG Portal and the RMAG Portal (still under development) form the access points to a new generation of community-based paleomagnetic and rock magnetic databases. These web portals share the same underlying MagIC Data Model, allowing for searches and access to information in both databases. Users can upload their own data (for free) using the standard MagIC Metadata and Data Model available online, as long as these uploads are associated with a citable publication and the user has registered. Substantial effort has gone into making the data model flexible enough to accommodate the broad range of data collected in rock and paleomagnetic studies. Where feasible, contributors are encouraged to upload all their measurements and descriptions of lab procedures, in addition to their higher level (published) results. Digital information that does not fit readily into the MagIC Data Model can always be uploaded and archived in the ERDA online archive.

MagIC is managed with the help of, in particular, a Steering Committee and a Metadata and Method Codes Committee, both of which have wide representation from the paleomagnetic and rock magnetic research community, nationally and internationally. MagIC also promotes a community dialog on how modern paleomagnetic, rock magnetic and geomagnetic databases should evolve, and what online tools are needed for data analysis. The dialog includes sponsoring discussions at workshops and promoting special sessions at scientific meetings. MagIC has evolved substantially since its inception at the PMAG Workshop held in March 2002 at Scripps Institution of Oceanography in La Jolla.

From the start MagIC has been incorporating PMAG data from the IAGA databases, namely GPMDB, PINT2003, TRANS, PSVRL and SECVR. However, the new data model allows contributors to archive more information than before, and provides ready access to the data online without the need to resort to commercial database software. RMAG is the first attempt to generate a database of rock magnetic data.

1.1.2 User and Data Policy

All data in the MagIC database are User-Contributed and uploaded on a Publication-by-Publication basis. The MagIC database primarily contains Activated Contributions from Peer-Reviewed Publications and Student Theses, including those In Press. These uploads are available to the entire MagIC userbase and are permanently archived under a strict version control. The various user actions, contribution types, levels of data ownership and data deletions are explained in detail below.

All database contributors and users are to adhere to the EarthRef.org Copyright Policy.

Data sets for publications In Preparation or In Review can be uploaded as Private Contributions Only and can be accessed by the data owners alone. These Private Contributions optionally can be set up by the data owner to have Group Access allowing select groups of users to access such hidden MagIC data sets with a group name and password.

Unpublished Data Sets that cannot be published (elsewhere) in the peer-reviewed literature can be uploaded into the MagIC database as an EarthRef.org Data Publication. These uploads should be accompanied by a list of authors, affiliations, a title, a short abstract and a technical note that describes the analytical methods applied. These publications will be assigned an EarthRef.org DOI and their citation will be permanently stored in the EarthRef.org Reference Database.

Activated Contributions will undergo Data Reviews by editors and referees representing the international paleo- and rock magnetic research community. These Data Reviews are meant to help improve the quality of the data uploads and are not meant to critique the science and conclusions presented in the publications.

Data owners can manage a contribution to the MagIC database in three different ways:

Once a contribution has been uploaded into the MagIC database, the data owner can restrict its access or provide open access to the world, again in three different ways:

There can only be one data owner per contribution. However, data ownership can change based on the following hierarchy:

Activated Contributions cannot be deleted by the data owners. Private Contributions remain in the database until the data owner replaces them with an activated update or activates an existing private upload. If a private contribution has been Inactive for more than 2 years, it will be removed from the data holdings by the MagIC Database Team.

The MagIC Database Team reserves the right to delete any Incorrect or Fraudulent Contributions without any further notice.

Each Activated Contribution will be assigned a Data Editor, who will select at least one Referee to review the data contribution for its correctness, completeness and clarity only. These reviews are meant to help improve the quality of the uploaded data in the MagIC Database. However, these reviews explicitly are not concerned with the science and conclusions presented in the publication.

Once the data review(s) are complete, the data owner should prepare an update of the data contribution and upload this as soon as possible into the MagIC Database. All activated versions of these data contributions will be retained in the database, will receive a time stamp and version number, and can be retroactively included in database searches.

1.1.3 MagIC Database Team and Committee Structure

The MagIC Database Team is responsible for the development and maintenance of the MagIC Database and Website. This team consists of the principal investigators, associated researchers, software engineers and undergraduate students.

The Steering Committee is intended to determine the direction to be followed in the development of paleomagnetic and rock magnetic databases, and includes broad international representation from the overall magnetics community as well as people involved in database development in related fields of Earth sciences.

Metadata and Method Codes Committee

The charge for the Metadata and Method Codes Committee is to engage in continuing broad consultation with the magnetics community on the kinds of information that need to be preserved in magnetic databases, and develop an appropriate metadata template for use in paleomagnetic and rock magnetic databases. In particular, it will be necessary to develop metadata structures that can be effectively exploited for existing lines of research while preserving the flexibility for accessing information required to develop new research ideas. The results of such consultation should then be incorporated into a metadata structure, which will be reviewed by the Steering Committee and presented to researchers in the international community for comment prior to finalizing the database structure. This committee will also be in charge of adding and editing the required method codes and controlled vocabularies.

Editorial and Review Committee

The charge for the MagIC Editors is to oversee the review process of all data submissions into the MagIC Database. They will assign each data contribution to one or more potential reviewers, who may include people outside of this committee.

Geological Timescale Committee

The charge for the Geological Timescale Committee is to work towards a nominal timescale to be used in the MagIC Database. Such a timescale will enhance the database's search capabilities and help in the interpretation and compilation of disparate data sets.

The charge for the Paleolocations Committee is to work towards a nominal plate motion model through geological time to be used in the MagIC Database. Such a model will enhance the database's search capabilities and help in the interpretation and compilation of disparate data sets.

1.1.4 History and Timeline

MagIC evolved from the PMAG Workshop held at Scripps Institution of Oceanography in La Jolla on 24-26 March 2002. The Abstract Volume for this workshop is available online. At this workshop it was agreed that there is a critical need to update and integrate existing magnetic database efforts sponsored by IAGA to take advantage of the technological advances provided by modern web-based data handling capabilities. In September 2002 a small workshop was held at the Institute for Rock Magnetism at the University of Minnesota to discuss the design of a new rock magnetic database and its integration with the efforts discussed at PMAG2002. A short report was published in EOS and is available online.

Three development stages mark MagIC's short history. In Phase I we focused on an internal review of the MagIC Data Model, the design of the Oracle 10x Database, the design of the SmartBooks, the coding of the MagIC Console Software and extensive testing. The PmagPy data analysis software was also developed, allowing scientists to translate paleomagnetic measurement data into the MagIC format. In Phase II we started to focus on the design and implementation of the Online Drilldown Interface, on populating, maintaining and optimizing the MagIC Database, and on implementing Advanced Visualizations in the MagIC web portals. Currently we are in Phase III, in which we plan to implement an Editorial and Reviewing System, to start using predominantly Flash, Web 2.0, AJAX and XML in our web and database interfaces, and to work toward full Interoperability with other online databases using Webservices.

1.1.5 Acknowledgements

This Help Library is a public domain document to support the Magnetics Information Consortium (MagIC) in its efforts to promote and facilitate an Information Technology (IT) Infrastructure for the international paleomagnetic, rock magnetic and geomagnetic community. This document came together as a result of discussions between the members of the MagIC Steering Committee and MagIC Metadata Committee formed during the first PMAG Workshop held in La Jolla (USA) in March 2002. It is being updated continuously to make certain that all tools and software can be easily used without too much guidance. To view who is involved in the MagIC consortium, please visit the http://earthref.org/MAGIC/whoswho.htm website.

MagIC is supported by NSF Grants EAR03-18672, EAR07-44107 and EAR07-44108.

1.2 Getting Started

1.2.2 Become a Registered EarthRef.org User

To register click on the Register link in the Topmenu of the EarthRef.org website. If you have forgotten your password or username, please go to the http://earthref.org/databases/ERML/ webpage or click on the Forgot Password link in the Topmenu. Twice a year you will be sent a Reminder Email with all registration info and a simple link to edit your user profile.

Registration is required if you want to upload your data into the MagIC database. In return, you will be informed of new features on the website, software updates and other information related to the MagIC initiative through Bi-monthly Newsletters and other email alerts. Searching and downloading data from the database does not require a username and password.

1.2.3 Reviewer and Editor Responsibilities

A reviewer of a MagIC database contribution is responsible for comparing the uploaded data to the data in the corresponding paper and commenting on any errors or omissions. An online review system is available to help reviewers with this task.

First the reviewer checks the reference to make sure it is correct and complete. Next the reviewer checks the various tables that have been filled out in the smartbook by the contributor. Tables with location data present the reviewer with a map so they can quickly check if the location is correct. Then the reviewer checks the method codes used by the contributor and verifies that they are correct and not missing significant descriptions of the data. Finally the reviewer writes any additional comments to the contributor and marks the review complete.

An editor for the MagIC database is responsible for overseeing the reviewing of new contributions to the MagIC database. The editor will be able to delegate the review process of new contributions to reviewers or review the contributions themselves. Reviews should be done in a timely manner. About a month to review a contribution is an appropriate timeline.

1.2.4 Online Search Features and Data Uploading

Two separate web portals have been developed within MagIC for Paleomagnetism (PMAG) and Rock Magnetism (RMAG). Both interrogate the same underlying MagIC and EarthRef.org databases. This ensures that the search forms for typical paleomagnetic and rock magnetic searches can be kept simple and transparent. However, it does not prevent paleomagnetists from performing rock magnetic queries when searching within the PMAG Web Portal, and vice versa, when starting out from within the RMAG Web Portal. By design each portal is merely a different entry point into the same database.

To search from within the PMAG Web Portal follow the http://earthref.org/MAGIC/search/ link. Here we provide you with search options for location, data type, geological age and reference. You can also do map searches using the Map filter. Each of these searches gives you a simple first page from which you can drill down all the way to the measurement level. An advanced search lets you perform more complex searches, including custom Boolean expressions.

Once the RMAG Web Portal is active, you can reach it by following the http://earthref.org/databases/RMAG/ link. From this web page you can perform simple searches based on experiment type or condition, sample type and reference. Again, in each search you will be allowed to drill down to the measurement level, and an advanced search option lets you perform more demanding search tasks.

The Online Upload Wizard has been developed to help you upload your own data. Uploading can be started by following the http://earthref.org/MAGIC/upload.htm link. The only requirement here is that you are a Registered EarthRef.org User. Uploading data can typically be completed in less than 5 minutes, depending on the number of data records involved. The Online Upload Wizard will ask you to log in with your EarthRef.org Username and to upload two files (a Microsoft Excel© file and a plain text version of the same file) that were automatically generated by processing your data with the MagIC Console Software. These two files will be archived in the EarthRef.org database while the data and metadata they contain will be parsed into the MagIC database.

This data uploading naturally complements the scientific process of preparing your data for submittal to any Earth science journal. Because you can upload your data into the MagIC database while keeping it Private (as described in the User and Data Policy), you can use all visualization tools on the MagIC website to study and analyze your data, either in their own right or in combination with data already available in the database. You can also make your data Group Accessible by assigning a group name and password that you personally can give out to your colleagues, co-authors and reviewers. All in all, this approach gives you a great deal of flexibility in working with your paleo and rock magnetic data. When you're ready you can Activate your private contributions and make them Publicly Available to other MagIC and EarthRef.org users.

1.2.5 The MagIC Console Software

Getting your data organized, complete and ready to submit for either a paper publication or ingesting into a database is a time-consuming job. We have developed the MagIC Console Software to aid you in your collation of paleo and rock magnetic data and to make the data ready for upload in the MagIC database. Visit http://earthref.org/MAGIC/software.htm to download the Latest Software Version.

1.2.6 Hardware and Software Requirements

Browsers and Screen Resolution

This site will work with all modern browsers, such as Microsoft Internet Explorer, Firefox, Safari and Chrome. To use many of the functions on this site, your browser should have JavaScript and Cookies enabled. A minimum screen resolution of 1024 x 768 is also recommended.

In order to view PDF (Portable Document Format) files accessible from the EarthRef.org and MagIC websites, you will need Adobe Acrobat Reader© that is available free for download at http://adobe.com. Some downloadable files from the EarthRef.org databases are available as compressed ZIP archives. These archives require the use of WinZip© for Microsoft Windows© users or Aladdin StuffIt Expander© for Macintosh© users to unzip them.

The MagIC Console Software program is coded in Visual Basic for Applications© in Microsoft Excel© and has been tested under Microsoft Windows© 2000/XP/Vista and Macintosh© OS 9.0/X. It is recommended that you run this software on a 500 MHz or faster computer with at least 512 MB of RAM and a native screen resolution of 1024x768. Empty MagIC SmartBooks start at ~200 KB in file size, but increase to several MB depending on the number of data records included.

1.2.7 Software Updates

Regular Software Updates will be published on the MagIC website. Each update will receive its own Version Number that will be stored in the MagIC SmartBooks as well. If your SmartBooks have an older version number, these files will be automatically updated the next time you open one with the MagIC Console Software. The newest software distributions are always available from http://earthref.org/MAGIC/software.htm. If you use the MagIC Console Software on a computer that is also connected to the Internet and it is outdated, you might be shown a message at start up that a newer version of this software is available for downloading from the MagIC website.
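The version check described above can be illustrated with a minimal sketch. The format of MagIC version numbers is not given in this Help Library, so the sketch assumes dotted integers such as "2.4"; the function names parse_version and needs_update are hypothetical and not part of the MagIC Console Software.

```python
def parse_version(text):
    """Turn a dotted version string such as "2.10" into a tuple of integers.

    Assumption: version numbers are dot-separated integers. The real MagIC
    format may differ.
    """
    return tuple(int(part) for part in text.strip().split("."))


def needs_update(smartbook_version, software_version):
    """Return True when the SmartBook was written by an older software version."""
    # Tuples compare element by element, so (0, 9) < (0, 10) as intended,
    # whereas the plain strings "0.9" and "0.10" would compare the wrong way.
    return parse_version(smartbook_version) < parse_version(software_version)
```

Comparing parsed tuples rather than raw strings avoids treating "0.10" as older than "0.9".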

1.2.8 Important MagIC Website Links

1.3 Getting Help

In this Help Library you will find detailed explanations on what Database Searches can be performed, how to Upload Your Own Data and how to use the MagIC Console Software to prepare your data for uploading. You will also find information on the MagIC User and Data Policy, the current Committee Structure, lists with Terminology and Definitions used in MagIC, and so on. Use this library when you run into problems or when you have questions. If you cannot find a good answer, please don't hesitate to Contact Us!

1.3.1 How to Use This Help Library

When using the MagIC Website you can always get help by clicking on the Help Tabs that appear in all the online search forms.

In a similar fashion you can get help by clicking on the Help Buttons (each showing a big question mark) in the MagIC Console Software. All Dialog Boxes and Error Messages in this software package will provide you with one of these buttons.

These Help Tabs and Help Buttons will give you context sensitive help for each step in the online search forms or the software you are using. By clicking on these tabs or buttons a new browser window will open in which a particular page from the online MagIC Help Library is loaded. The shown help page explains in some detail what the current page is about, what your options are and what to do in order to proceed. Help subjects also include Error Messages that you may receive when searching on the web or when using the software. Each help item also contains a See also list of related files at the bottom of the page.

If you find that the help pages do not answer your question or help resolve your problem, please click the Feedback link to let us know how we can improve the help files system to serve you better. You can always Contact Us directly via email!

1.3.2 Contacting the MagIC Database Team

Chair of the MagIC Steering Committee

Institute of Geophysics and Planetary Physics

Scripps Institution of Oceanography

University of California, San Diego

La Jolla, CA 92093-0225, USA

1-858-534-3183 (office phone)

1-858-534-5332 (fax)

cconstable@ucsd.edu

EarthRef.org Database Manager and Webmaster

Developer MagIC Software Console

Chair of the MagIC Metadata Committee

College of Oceanic & Atmospheric Sciences

Oregon State University

104 COAS Admin Bldg

Corvallis, OR 97331-5503

1-541-737-5425 (office phone)

1-541-737-2064 (fax)

akoppers@coas.oregonstate.edu

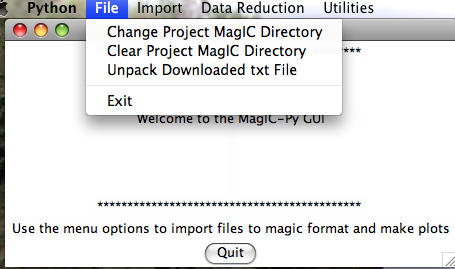

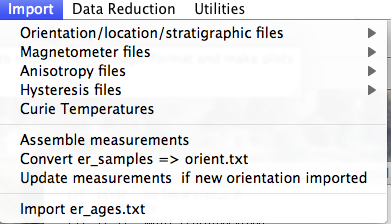

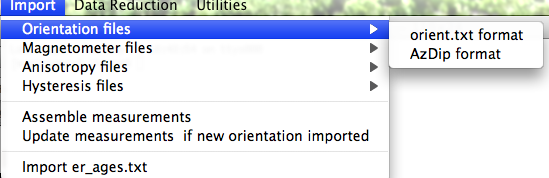

Developer MagIC Python Software

Geological Research Division

Scripps Institution of Oceanography

University of California, San Diego

La Jolla, CA 92093-0220, USA

1-858-534-6084 (office phone)

1-858-534-0784 (fax)

ltauxe@ucsd.edu

2 MagIC Website

2.1 Uploading Data

2.1.1 Using the Contribution Wizard

The MagIC Contribution Wizard is a step-by-step interface to create and update your contributions to the MagIC database. It is intended to be an intuitive tool for you to use.

To proceed, you need to select the appropriate option:

Select the New contribution option if you are not going to update one of your existing contributions, or if this is your first contribution to the MagIC database.

Update an Existing Contribution

When you choose to update an existing contribution, you must upload the ASCII text file (*.txt) and Microsoft Excel file (*.xls) associated with the contribution. In most cases, an update will be necessary if some of the information in the existing contribution has been changed, or information has been added. You will be given a list of your previous contributions on the Reload Previous Contribution page. Select the contribution you wish to edit.

Changes will take effect immediately upon completion of the contribution process, and will be available for users to view. If your contribution is listed as In Progress on the Reload Previous Contribution page, it is not yet available for users to view, since the final confirmation page was not reached on your previous contribution visit. You must complete the entire process in order for your contribution to be available for users to see.

The Reload Previous Contribution form allows you to select one of your existing contributions to update.

A list of your existing contributions is given, with the most recently updated or created first. Contributions are grouped five per page, so if you have made more than five contributions, you will need to use the page navigation links at the bottom of the form to locate the desired contribution.

Each contribution is listed with the date contributed or updated, along with a unique identifier string and the contribution's status. A status of Completed indicates that you completed the entire process for that contribution, and it is currently available for users to view in search results. In Progress indicates that some steps in the process have not yet been completed for that contribution, so in order for users to view it, it must be completed. Updated indicates that the contribution was completed at some point, and that you have subsequently updated some of its data.

Other information is provided to help you differentiate your contributions. Each citation to which the contribution is linked is listed in the standard citation format, including its author(s), year published, title, and journal and DOI information, if applicable.
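The ordering and paging of the Reload Previous Contribution list can be sketched as follows. This is only an illustration under stated assumptions: each contribution is modelled as a dictionary with hypothetical "id", "updated" and "status" keys, and the function names are invented for this example rather than taken from the MagIC website code.

```python
from datetime import date

PAGE_SIZE = 5  # contributions are grouped five per page


def page_count(contributions):
    """Number of pages needed to show all contributions (ceiling division)."""
    return -(-len(contributions) // PAGE_SIZE)


def page_of_contributions(contributions, page):
    """Return the contributions on the 1-based `page`, most recent first."""
    ordered = sorted(contributions, key=lambda c: c["updated"], reverse=True)
    start = (page - 1) * PAGE_SIZE
    return ordered[start:start + PAGE_SIZE]


# Hypothetical example data: seven contributions updated on consecutive days.
example = [
    {"id": i, "updated": date(2009, 1, i + 1), "status": "Completed"}
    for i in range(7)
]
first_page = page_of_contributions(example, 1)   # the five newest entries
second_page = page_of_contributions(example, 2)  # the remaining two
```

With seven contributions, the list spans two pages, which is why the page navigation links are needed once you have more than five.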

Activate a Private Contribution

If this is your first contribution to MagIC, you will only be able to select the New contribution option.

All steps in the contribution process, along with the final confirmation step, must be completed in order for your contribution to be made available to users of the database. Once your contribution is in the database, you may update it at any time. If you do not complete your contribution, it will be removed from the database and you will need to upload it again.

In order to make a contribution, you must first log in. If you do not yet have a username and password, select the "login" option at the top right of your main browser window. From there, you may create a profile, username, and password.

2.1.2 Logging In

You must be logged in to make or update a contribution to MagIC. If you are not currently logged in, you will be automatically taken to the User Authentication screen, where you can enter your username and password. From there, you will be taken to the next step in the contribution process.

If you have not yet created a profile, you may follow the appropriate link from the User Authentication screen or click on the Register tab in the main browser window. This will take you to a simple form to enter your contact information and to create a username and password. The identification of contributors and their addresses is an integral part of scholarly data archiving and this information is continuously updated in the EarthRef.org address register. EarthRef.org does not distribute address information to third parties. You may read the entire EarthRef Privacy Policy by clicking on the Disclaimer link from the home page.

Some of the contact information given in your profile will be displayed when a user views your contributions, and from Mailing List searches.

2.1.3 Uploading Files

Two files must be uploaded for each contribution, and both are output from the MagIC Console Software. One file is a Microsoft Excel (*.xls) file, which contains a spreadsheet for each table in the database into which your data will be inserted. The other is an ASCII text (*.txt) file containing information that the upload programs need to be able to add your information to the database.

Uploading a file is accomplished in the standard fashion through your browser. Clicking the Browse... button will cause a dialog box to display, allowing you to browse for the desired file on your local computer or network. Once the file is selected, the file name will be automatically filled in beneath the upload field.

If either of the required files is missing from the upload, you will get an error message asking you to upload both files. In addition, if one of the files has become corrupted and cannot be read, your upload will not be possible and you will receive an error message.
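Before starting an upload, it may help to confirm locally that both files are present. The sketch below is not part of the Contribution Wizard; the helper name missing_upload_files is hypothetical, and the check only covers existence, extension and non-zero size, so it cannot detect every kind of corruption.

```python
from pathlib import Path

# The two file types that every contribution must include.
REQUIRED_SUFFIXES = (".txt", ".xls")


def missing_upload_files(paths):
    """Return the required suffixes for which no usable file was supplied.

    A file counts as usable when it exists and is not empty. This is an
    assumed, simplified stand-in for the server-side checks.
    """
    found = set()
    for path in map(Path, paths):
        if path.is_file() and path.stat().st_size > 0:
            found.add(path.suffix.lower())
    return [suffix for suffix in REQUIRED_SUFFIXES if suffix not in found]
```

An empty result means both a *.txt and an *.xls file were found; otherwise the returned list names the file types that still need to be produced with the MagIC Console Software.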

After clicking the Continue button, you will immediately see a progress bar showing the status of your upload. Because MagIC contribution files may contain several thousands of records and may therefore be very large, the file upload may take a moment. Please do not click the Continue button a second time since this will force the upload procedure to begin again and will delay your upload.

Upon successful upload, you will automatically be taken to the next step in the contribution process.

2.1.4 Selecting Main Reference "This Study" for Your Contribution

Upon successful file upload, you must first verify the main reference to which your contribution will be linked. If an exact match to the reference is found in the database (either by DOI or author list, year, and title), or the Contribution Wizard did not find any similar matches, you will see a screen like the one below in which one reference is listed. You need only click the Continue button to verify that this is the correct reference.

2.1.5 Selecting Additional References

After you have verified the main reference (This study) to which your contribution will be linked, the Contribution Wizard will process all other reference information included in your uploaded files. As with the main reference, the Wizard will attempt to find exact matches to the references.

If all references are found in the database (either by DOI or author list, year, and title) and no errors are found, you will not need to verify any information. Instead, you will automatically be taken to the next step. If neither an exact match nor similar matches are found in the database, the Contribution Wizard will insert the new reference.

If, however, the Contribution Wizard cannot find an exact match but finds similar references, you will see one or more possible existing database matches listed, followed by the reference in your contribution. In most cases there will be only a slight spelling or other variation between the existing reference and the one in your contribution, and you will need to verify that the highlighted reference is indeed the correct one to which your contribution should be linked. The Contribution Wizard tries to find the best match to your contribution's reference, but you must confirm the reference before proceeding.

It is always best to select the existing database reference, even if you find slight variations in the spelling. This is because other data in the database may already be linked to it, and the existing reference data will most likely be much more complete than the reference in the contribution file. You may contact EarthRef.org to update the variation in the database. If you choose to add the new reference instead of using the existing one, the reference data that you provided in the MagIC software will be inserted into the database.
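The three outcomes described in this section (exact match, similar matches that need confirmation, or a new reference) can be sketched as below. The Contribution Wizard's actual matching rules are not documented here, so this sketch swaps in Python's difflib similarity ratio with an arbitrary threshold; the dictionary keys, the threshold value and the function names are all assumptions made for illustration.

```python
from difflib import SequenceMatcher

SIMILARITY_THRESHOLD = 0.85  # hypothetical cut-off for a "similar" reference


def normalize(ref):
    """Build a lower-case comparison key from author list, year and title."""
    return " ".join(f"{ref['authors']} {ref['year']} {ref['title']}".lower().split())


def match_reference(new_ref, existing_refs):
    """Return ("exact", ref), ("similar", [refs]) or ("new", None)."""
    new_key = normalize(new_ref)
    for ref in existing_refs:
        same_doi = bool(new_ref.get("doi")) and new_ref.get("doi") == ref.get("doi")
        if same_doi or normalize(ref) == new_key:
            return "exact", ref
    # No exact match: rank the existing references by textual similarity.
    scored = [
        (SequenceMatcher(None, new_key, normalize(ref)).ratio(), ref)
        for ref in existing_refs
    ]
    scored.sort(key=lambda pair: pair[0], reverse=True)
    similar = [ref for score, ref in scored if score >= SIMILARITY_THRESHOLD]
    if similar:
        return "similar", similar  # the contributor must confirm one of these
    return "new", None  # the reference would be inserted as a new entry
```

In this sketch a result of "similar" corresponds to the confirmation screen, where selecting the existing database entry is usually the better choice.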

Occasionally, there is some data missing from the uploaded file because it has not been completed in the MagIC software prior to the creation of the upload files. In this case, you will see any problem references listed at the bottom of this screen to alert you to the fact that these references will not be matched to your contribution. It is best in this case to refer to your data in the MagIC program and to complete it as best you can. Once fixed, you may return to the Contribution Wizard to update the contribution. You may, however, choose to continue without correcting the references and update the contribution at a later time. Until then, your contribution will not be linked to the problem references.

2.1.6 Selecting Mailinglist Entries

Your data for people associated with the contribution you are uploading will be inserted into the EarthRef.org address book. The Contribution Wizard will perform a check to see whether these individuals already exist in the database. If a match is found for each individual, or no similar entries already exist, the mailinglist data will be automatically processed and you will not see this step.

If, however, an exact match cannot be found for one or more individuals, and one or more similar entries are found, you will be asked to select the best match for each individual. As with the reference data, the difference between the information in your contribution and that in the database may be a simple spelling difference. If that is the case, it is always best to select the existing entry, as its data will be more complete, and it may already be linked to other data in the database. You must select the most appropriate entry and click the Continue button to process these records.

2.1.7 Data Parsing

Most of the data in your contribution (aside from reference data) will be processed in the Data step of the Contribution Wizard. In most cases, this will consist of inserting thousands of records into many database tables, which can take several minutes.

So that you will know where in the data processing stage the Contribution Wizard is, you will see a status bar like the one in the screen below. For each table in your contribution files, you will see a status bar indicating the current record being processed. The status bar image illustrates visually where each record lies, and below the bar is a numeric indication of the record.

If an error is found during the data processing, the processing will not continue. You will see a message indicating what the problem is so that you may fix it in your MagIC program data. Once fixed, you may return to the Contribution Wizard to update your contribution.

2.1.8 Activating Your Contribution

After processing is complete, you will see a confirmation message stating the number of seconds processing took, along with instructions for activating your contribution. Please note that even though your data has been inserted into the database, you must verify that you want to activate it by checking the checkbox on this screen. This is done to allow you to decide when your contribution will be activated. If, for example, an error was found at some point in the contribution process, or you decide you would like to update some of the data and re-upload the files, you may choose to wait until you have the updated data files to activate your contribution.

Activate Contribution with Global Access

If you continue without selecting the option to confirm the activation, your contribution will remain in the database temporarily, but will be unavailable for users to see. It will be removed from the database within 48 hours and it will not appear in your list of existing contributions.

If you have checked the "Activate this contribution" checkbox on the previous screen, your contribution will be activated in this step, making your data available to users. You will again see a status bar indicating which table is currently being activated. The process will take only a few seconds.

Keeping Contribution Private with Group Access

In this final step, a confirmation e-mail will also be sent to the e-mail address provided in your user profile, and will include a link to a summary page of your contribution data, a link to the main citation referred to in your contribution, and a reminder of your username and password.

Once activation has completed, you will see a confirmation message. At this time, the contribution process is complete, and your data is available for users to see.

2.1.9 Summary Page

If you did not select the "Activate this contribution" checkbox on the previous page, your contribution will not be activated in this step, and instead of a status bar you will see a message alerting you to this fact. If you forgot to check the checkbox, simply click the Back button to return to the previous step, check the checkbox, and click the Continue button. The process will then proceed as described above.

2.2 Searching the Web Portals

Finding, collecting and downloading paleomagnetic and rock magnetic data from the MagIC Database is important for most users. In this Chapter we explain the different search functionalities you can find on the MagIC Home Page and in its Search Portals. For more detailed information on searching we refer to the Online MagIC Help Library at http://earthref.org/MAGIC/help.htm.

2.2.1 MagIC Website Home Page

Start by visiting the http://earthref.org/MAGIC home page, from where you can launch the PMAG and RMAG search portals, manage your own contributions, find important web links and a list of the most recent contributions, and read the latest news about paleo and rock magnetism.

2.2.2 The PMAG Portal

The PMAG and RMAG web portals form separate entry points for paleo and rock magnetic searching. Although you can carry out different kinds of searches, both portals interrogate the same underlying EarthRef.org and MagIC Databases. For this reason, you can find rock magnetic data when searching in the PMAG Portal and paleomagnetic data when searching in the RMAG Portal. The portals are merely different starting points that are tailored to the needs of either magnetic community.

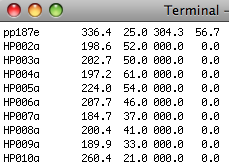

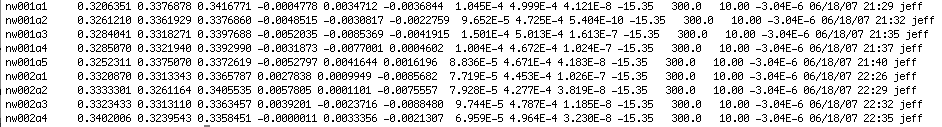

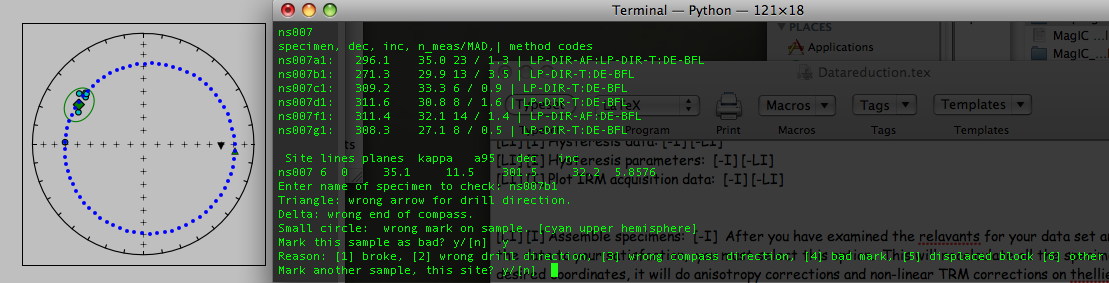

Below, we show you the resulting Measurement List from a particular drill down search in the PMAG Portal. As can be seen from this example, we drilled down from McMurdo to the actual measurements on Specimen mc01a. In this view of the data you can link back to the Tauxe et al. 2004 paper in the EarthRef.org Reference Database, you can use the links in the upper left of this listing to navigate back through your drill down history, and you can use the Save, Plot and Options pull down menus for more advanced functionality.

Note that the Units of the data are given between brackets in the Header of this table. All units are SI based, except for some fields, such as geological age. If you mouse over the headers, a longer explanation is given in a popup balloon. If you mouse over any of the cells in the table, a similar popup balloon is shown that contains the header information, as well as the basic information that is displayed in the leftmost column (experiment info in this example). This functionality will help you to better navigate larger data tables, including when you show the table in Expanded View (see below).

In the above example only data from the Basic View is shown. You can select the Expanded View from the links to the upper left. If you do so, all available data for each particular measurement are shown. In the near future you will have the ability to set your own Search Preferences, in which you can predefine what columns are shown in both the Basic and Expanded Views, and in what units you want to view your search results. For example, you will be able to set your preferences to always show ages in Ma and paleointensities in µT instead of the default T.

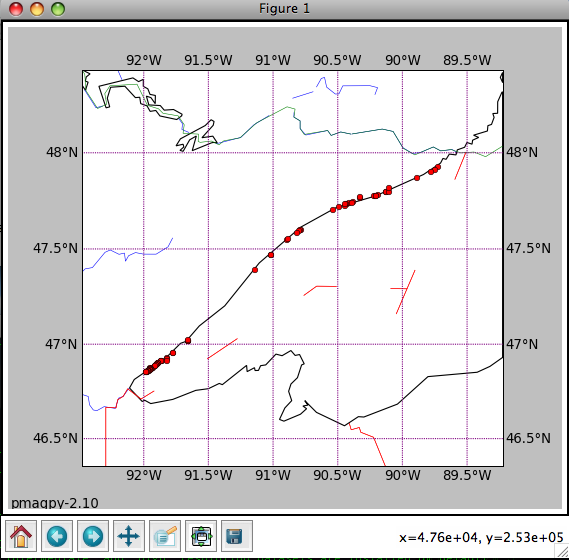

In the PMAG Portal one can search by location, which allows you to drill down from any location to the actual measurement data. You can do so by providing a lat-lon box, keywords to find a certain location by name, the last name of the first author, or a combination of these searches. You can also perform a map search by clicking on the Map Search button.

Searches by reference will provide you with a Detailed Reference Information table (see below) for a given publication. From this table you can view and download various Background Data Files, including the Microsoft Excel© and text versions of the MagIC SmartBooks. This table also provides Quick Links to the MagIC and other EarthRef.org databases, when these exist. In addition, a link may appear to the Provider of the publication, which redirects you to the Full Length HTML version of the paper on the publisher's website. From here you can download a PDF of the publication, provided you or your institution has access to the journal or publication in question.

In the PMAG Portal you can also perform a more advanced search, which allows you to combine various Boolean search terms. These terms normally are an accumulation of all other standard searches. You can also perform a map search by clicking on the Map Search button.

2.2.4 Plotting and Visualizations

Many Plotting and Visualization options are available through the Plot menu. In the plots and maps that you can generate at the MagIC Website all data from a single results table are included. If a results page contains more than one table, you can always Group Together the tables and make a new plot with all combined data. All plots can be saved as PNG (image) or SVG (scalable vector graphics).

These plotting tools will be expanded considerably in the future, allowing different kinds of experiments and data to be plotted, such as Rock Magnetic measurement plots (hysteresis loops, FORC diagrams, etc.) and Stratigraphic Section and Drill Core plots. The ultimate goal is to make these plots and maps of good publication quality, to deliver them as SVG (already available), and to make the plotting more interactive so that you can access the results of different averaging schemes or data selections.

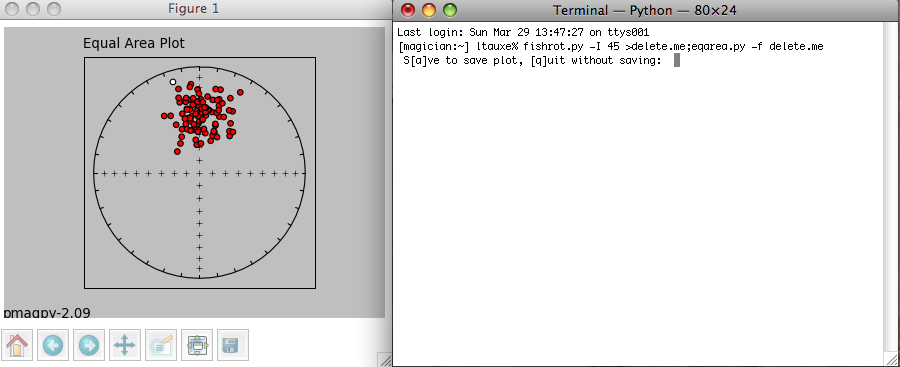

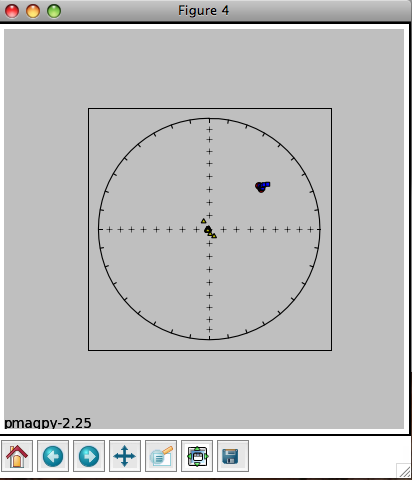

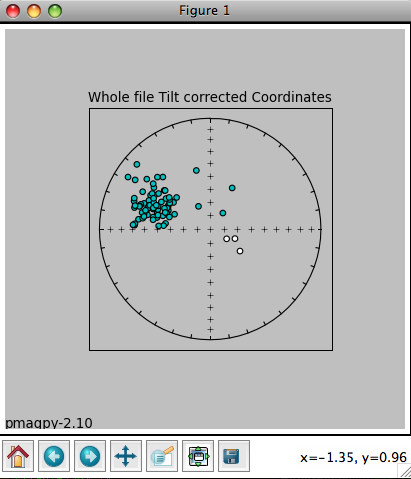

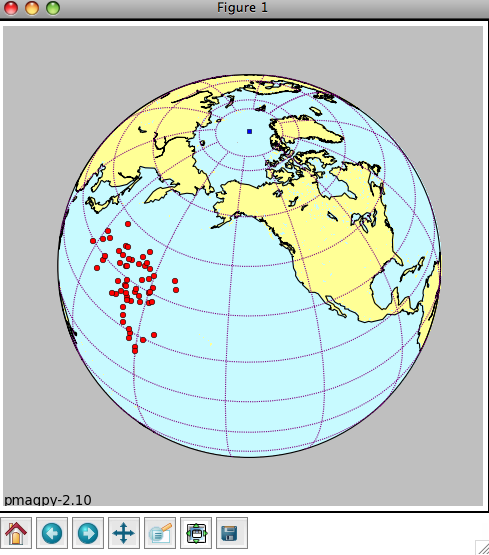

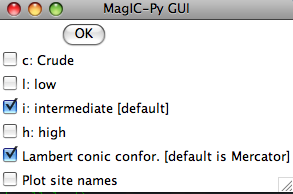

Global Maps and Orthographic Projections

On the Location, Site, Sample, Specimen and Measurement levels you can generate maps that plot their latitude-longitude positions. Global Maps (see the image above) are available as well as Orthographic Projections (below) in order to view data around the poles. Both map views are combined with an Equal Area plot.

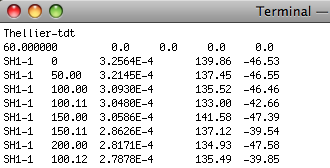

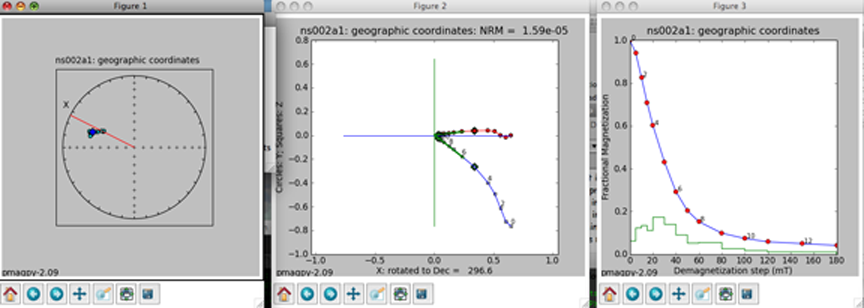

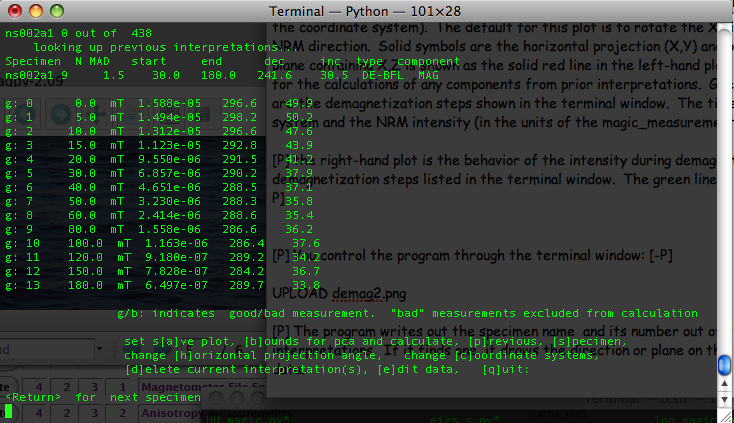

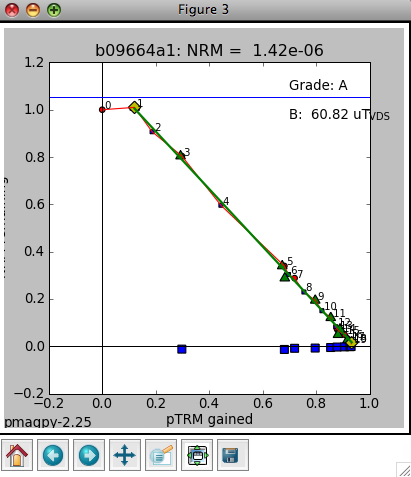

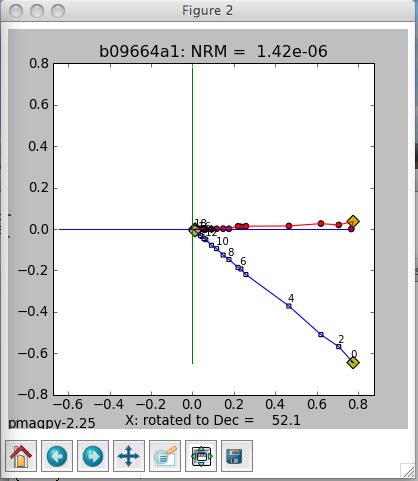

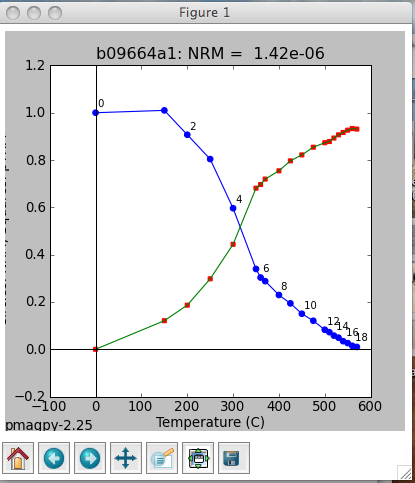

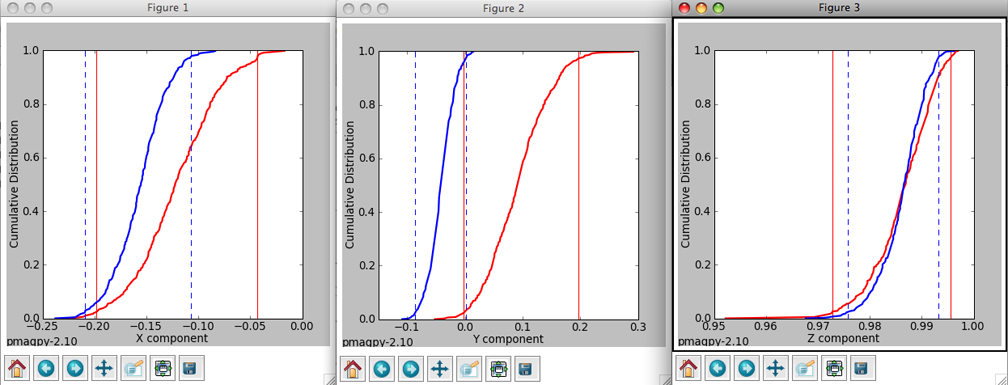

On the Measurement level you can plot various other diagrams, such as typical Zijderveld, Thellier and Arai plots. These plots (see below and next page for some examples) are drawn from the data residing in the database and provide a good view of the data itself and the quality of the experiments carried out.

2.3 Error Messages

2.3.1 Invalid File Type

The error "Invalid file type" will appear if one or both of the files uploaded are incorrect. The most common reason for this is inserting the Microsoft Excel file (*.xls) in the ASCII Text file (*.txt) upload field and vice versa. Simply click the Back button and insert the correct file into each field.

If you are certain that the correct file is in each upload field, a more serious issue may be the cause for this error. One of your files may be corrupt or can otherwise not be read correctly by the Contribution Wizard. In this case, it is suggested that you contact the webmaster for assistance.

2.3.2 Unable to Move File to Directory

The error "Unable to move [file] to [directory]" is a serious but uncommon error that may occur during contribution upload. It is a problem on the server side, and is not a consequence of a user action or corrupt file. When this error occurs, the webmaster is immediately notified, and the problem will be resolved. It is suggested that you contact the webmaster and you will be notified when the problem has been corrected.

2.3.3 Unable to Find File

The error "Unable to find [file]" occurs during data parsing when data is expected for a particular table but is not included in your contribution. The most common cause for this error is that the data was not included in your input into the MagIC Console software. It is recommended that you return to the Console to complete your data input and then return to the Contribution Wizard.

If you are certain that the data input into the MagIC Console software is complete, please contact the webmaster with a description of the problem.

2.3.4 Missing Data for Reference

The error "Missing data for [reference]" occurs during the reference step when some data for a particular reference is incomplete. The missing data will be described with the error message. The most common cause for this error is that the data was not included in your input into the MagIC Console software. It is recommended that you return to the Console to complete your data input and then return to the Contribution Wizard.

If you are certain that the data input into the MagIC Console software is complete, please contact the webmaster with a description of the problem.

3 MagIC Console Software

Getting your data organized, complete and ready to submit for a paper publication or ingesting into a database is a time-consuming job. We have developed the MagIC Console Software to aid you in your collation of paleomagnetic and rock magnetic data and to make the data ready for uploading in the MagIC database.

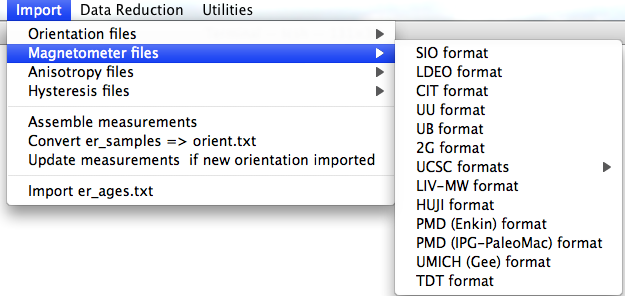

3.1 Introduction to the Standardized MagIC Data Format

Establishing an integrated paleomagnetic and rock magnetic database is difficult as we need to move large quantities of data into the MagIC database from both legacy and new studies. To address this challenge we have chosen an approach that makes the archiving of these data Synchronous with the Scientific Publication process and is entirely based on User Contributions. Around the time of publication each scientist has all relevant data for a publication at their fingertips and knows best how to deal with it. In fact, they probably went through a sustained effort to collect all measurement data (and most of the relevant metadata) to perform their scientific research. The MagIC Console Software is developed to aid the scientist in collating all information at this opportune time and to do so by making use of a Standardized MagIC Data Format.

3.1.1 What is a SmartBook?

We wish to tap into this process by supplying scientists with a tool that they can use to collect all data that are relevant to one particular publication (henceforth referred to as a project). Key to this approach is a standard Data and Metadata Template in the form of a Microsoft Excel© SmartBook in which they can enter their data and in which they can further process their data to eventually upload into the online MagIC database.

Because we use this standardized SmartBook, protocols can be established around which scientists can build (or adapt) their (current) laboratory protocols. For example, they can streamline the collection of measurement data by enabling the export of standard MagIC Format Text Files that can be readily imported into the MagIC SmartBooks. Similar export functions can be established for various data reduction software and geomagnetic modeling codes. This approach significantly increases the flow of magnetic data into the MagIC database and circumvents labor-intensive data entry for individual scientists.

The MagIC SmartBook has been defined in a way so that it can store all measurements and their derived properties for studies of paleomagnetic directions and intensities, and rock magnetic experiments, such as hysteresis, remanence, susceptibility and anisotropy. The basic design of this SmartBook focuses on the work-flow in typical paleomagnetic and rock magnetic studies. This ensures that individual data points can be traced between actual measurements and their related specimens, samples, sites, locations, and so forth.

To make working with the MagIC SmartBooks more straightforward, we have developed the MagIC Console Software that, in addition to many other functions, contains an Import function for standard text files. This software helps you to Enter Data, it helps you to check the Correctness and Coherence of the data entries, and it helps you Prepare for Uploading your SmartBook in the MagIC database. Since the MagIC SmartBooks have been developed as Microsoft Excel© Files, most users will feel comfortable with the setup, which makes use of standard toolbars and dialogboxes.

3.1.2 Structure of the MagIC SmartBooks

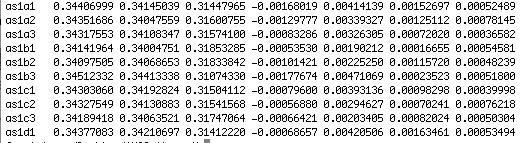

The MagIC SmartBooks are standard Microsoft Excel© Workbooks that contain predefined tables stored in separate worksheets. There is an obvious hierarchy in these tables, where the EarthRef (ER) tables are the most general and are applied to other databases hosted under this umbrella website as well.

The four MagIC tables (measurements, methods, instruments and calibrations) are less general, but contain data and metadata that are common for typical paleomagnetic and rock magnetic projects. The PMAG and RMAG tables contain the most specialized, highly derived data.

3.1.3 MagIC Table Layout

Each table is divided into several pre-titled columns. The first five rows of each column characterize the data to be entered below the headings. The first row gives the Label of the column and describes the data to be entered (explanation in plain English). The second row displays the Oracle Variable Name associated with these data in the relational database and used by the MagIC Console Software. The third row indicates the Data Type and maximum length of text strings. The fourth row shows the expected Unit of the data. The fifth row indicates the Status of the data to show whether the data is required, recommended or optional. All five heading rows are fixed in the MagIC Data and Metadata definition, which is strictly maintained and versioned.

Upon opening a SmartBook in the MagIC Console Software, these heading rows are used to check the validity of the tables and columns residing in the Microsoft Excel© File.

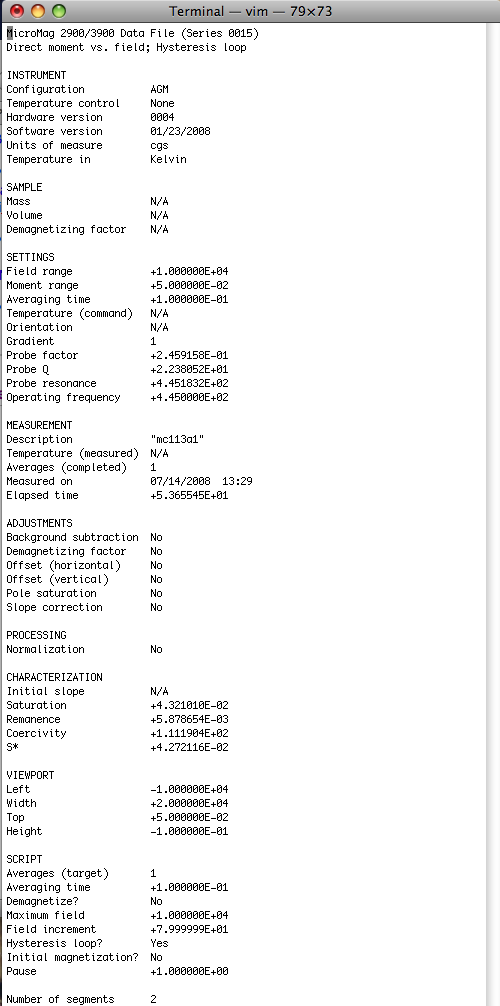
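To make the five heading rows concrete, the following minimal sketch models them as a small data structure. It is not taken from the MagIC Console Software: the `ColumnDef` class, the `read_header` and `missing_required` functions, and the assumption that the Status row holds the literal word "required" are all hypothetical.

```python
from dataclasses import dataclass


@dataclass
class ColumnDef:
    label: str        # row 1: plain-English description of the column
    oracle_name: str  # row 2: Oracle Variable Name
    data_type: str    # row 3: data type and maximum text length
    unit: str         # row 4: expected unit
    status: str       # row 5: required, recommended or optional


def read_header(rows):
    """Turn the five heading rows of a table into one ColumnDef per column."""
    if len(rows) != 5:
        raise ValueError("a table heading must have exactly five rows")
    return [ColumnDef(*cells) for cells in zip(*rows)]


def missing_required(columns, record):
    """List the Oracle names of required columns left empty in a data record."""
    return [c.oracle_name for c in columns
            if c.status.lower() == "required" and not record.get(c.oracle_name)]
```

A check of this kind illustrates how fixed heading rows allow every data record below them to be validated column by column.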

3.1.4 Standard MagIC Text File Format

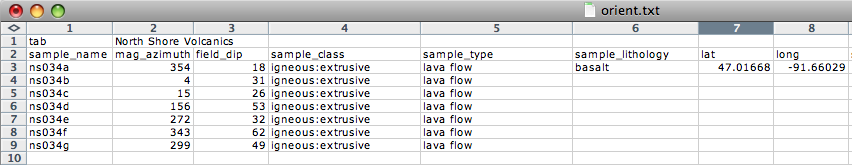

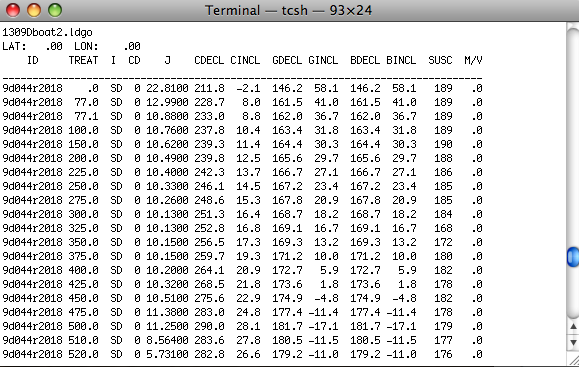

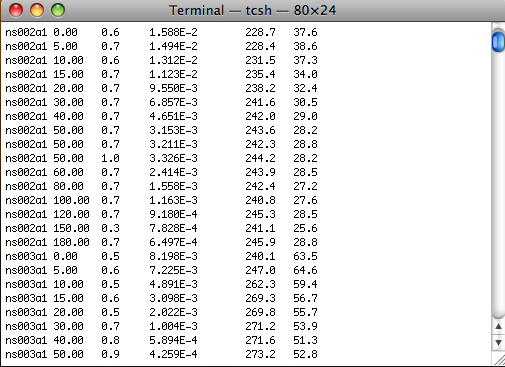

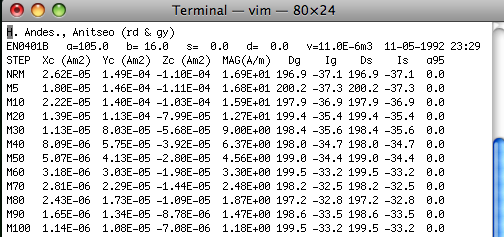

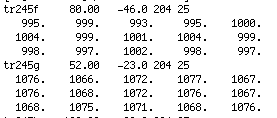

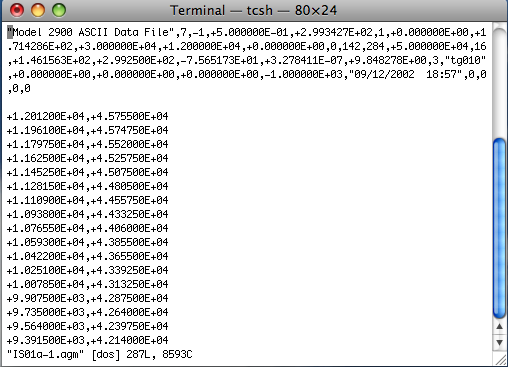

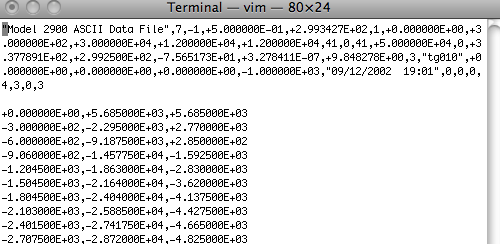

The MagIC Console Software has the ability to export and import Flat Text Files. To be able to easily transfer data between outside software packages, data collection computers and the MagIC SmartBooks, we have developed the Standard MagIC Text File Format. Experience shows that this is the most efficient way when dealing with large data sets. It allows you to quickly import these data into the MagIC SmartBooks without making mistakes. In the example below, we show how this text file is based on the layout of the table displayed above.

The Standard MagIC Text File Format starts out with two header lines (red fonts) indicating the delimiter used, table name and column names. Below these header lines, the data appears in the same order as indicated by the column names (black fonts).

Possible delimiters include tab and pipe symbols. Note that single or double quotes around text strings are not required. You can also store multiple data blocks (for one project) in one text file, where each data block is stored according to the above rules but is separated by the standard >>>>>>>>>> divider. Since each block has its own header lines, in principle, you can store the results from different experiments or tables in one and the same Standard MagIC Text File. This will result in a text file that may look as follows.

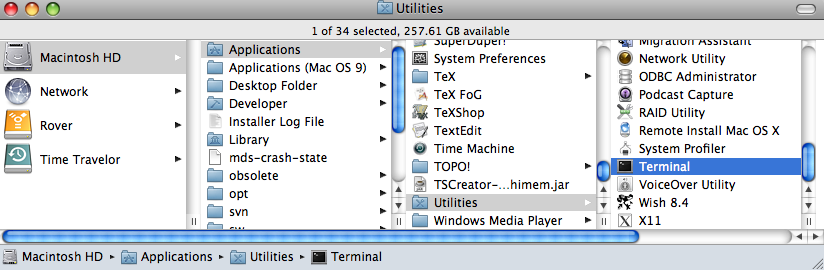

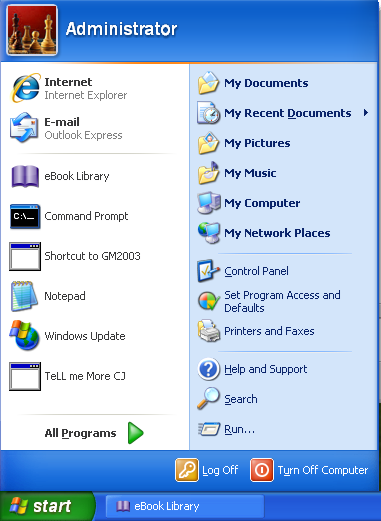

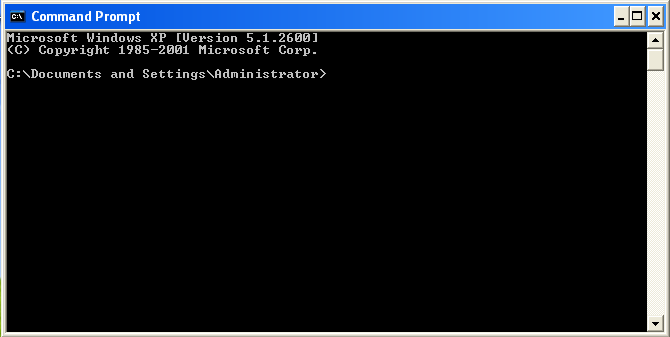
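For users who generate or read these text files from their own software, the rules above can be sketched in a few lines of Python. This is a hedged illustration and not the import routine of the MagIC Console Software. In particular, it assumes that the first header line holds a delimiter keyword (`tab` or `pipe`) followed by the table name, and that the second header line holds the column names; the function names are hypothetical.

```python
DELIMITERS = {"tab": "\t", "pipe": "|"}  # assumed keywords for the two delimiters


def _is_divider(line):
    """True for a line made up only of '>' characters."""
    stripped = line.strip()
    return bool(stripped) and set(stripped) == {">"}


def _parse_block(lines):
    # Header line 1 (assumed layout): delimiter keyword, then the table name.
    keyword = lines[0].split("\t")[0].split("|")[0].strip().lower()
    sep = DELIMITERS[keyword]
    table_name = lines[0].split(sep)[1].strip()
    # Header line 2: column names. Remaining lines: data in the same order.
    columns = [c.strip() for c in lines[1].split(sep)]
    records = [dict(zip(columns, [v.strip() for v in row.split(sep)]))
               for row in lines[2:]]
    return table_name, records


def parse_magic_text(text):
    """Parse one or more data blocks into {table_name: [record dicts]}."""
    tables = {}
    block = []
    for line in text.splitlines() + [">"]:  # trailing divider flushes the last block
        if _is_divider(line):
            if block:
                name, records = _parse_block(block)
                tables.setdefault(name, []).extend(records)
            block = []
        elif line.strip():
            block.append(line)
    return tables
```

Because each block carries its own header lines, blocks for different tables can use different delimiters within the same file, as the sketch allows.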

3.1.5 Software Installation

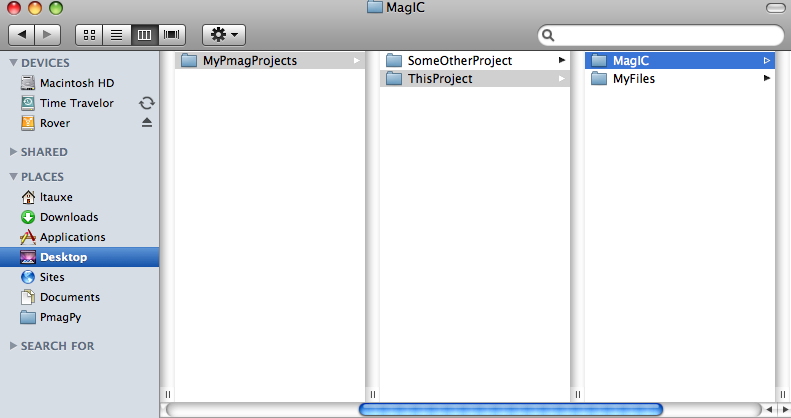

The MagIC Console Software does not work with a fixed directory structure. However, we recommend that you generate a main MagIC directory on your hard disk and that you store the populated SmartBook for each of your MagIC Projects in sub directories.

In the table below we have listed four files that are required for the proper functioning of the MagIC Console Software. Note that each file has been named according to its current version, for example v20 or v24 (instead of vXX).

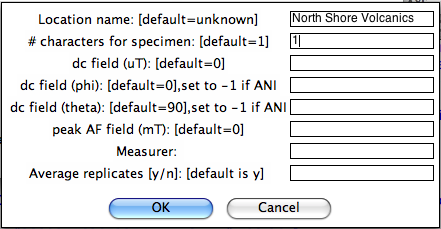

3.2 Entering New Data

While populating the MagIC SmartBooks there are several possible approaches to put your paleomagnetic and rock magnetic data into these Microsoft Excel© Files. Here we will provide a guided explanation on how to populate the MagIC SmartBooks most effectively, using a top-down approach, where first the high-level data and metadata are entered, followed by more detailed magnetic measurement and derived data later on.

Note that this is an example only that allows you to get familiar with the capabilities of and functions available in the MagIC Console Software. There may be better ways to go about this for certain special cases or data sets, as described elsewhere in this help library.

3.2.1 Overview of the Data Population Process

We start out by giving an overview of the MagIC Data Population process, which can be divided into four different parts. We will follow this general overview by a Step-by-Step Guided Explanation of the process.

Starting a New MagIC SmartBook

Entering the High Level Metadata

Entering and Importing PMAG and RMAG Data

The above 15 steps will be discussed in detail in the Step-by-Step Guided Explanation in the next help topic. Remember, however, that this is a generalized approach. Some steps may not be required (and thus can be skipped) because they are not relevant to your project. Therefore, it is especially important to always run the MagIC Wizard at the beginning of your data population session.

3.2.2 Step-by-Step Guided Explanation

1. Start the MagIC Console Software

2. Generate a New SmartBook File

4. Add Your Current Project Citation in the ER_citations Table (This Study)

5. Compile Methods Definitions for this Project

6. Compile Instrument Definitions for this Project

7. Add other Citations to ER_citations table

8. Add all Mail Addresses to ER_mailinglist Table

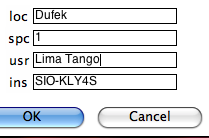

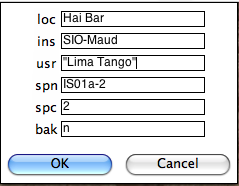

9. Define the Data and Metadata in the General EarthRef [ER] Tables

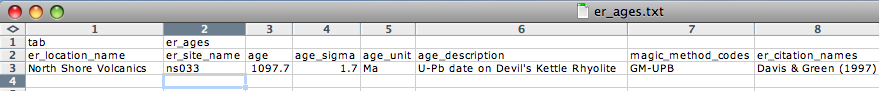

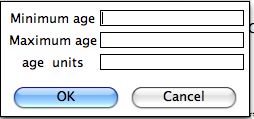

10. Add Age Data to the ER_ages Table

11. Add Measurement Data in the MAGIC_measurements table

12. Add Paleomagnetic and Rock Magnetic Data

15. Upload your SmartBook into the MagIC Online Database

3.3 Special Cases

There may be a few cases that require a special approach while populating the MagIC SmartBooks. In this Chapter we treat these special cases by briefly describing the issue and a possible solution.

3.3.1 When Requiring New Instrument Codes

If you cannot find the appropriate Instrument Code in the software, you can always create a new code by activating the MAGIC_instruments table and by clicking the Edit Data button on the MagIC Toolbar. Continue by clicking the New Record button and fill out all fields. After uploading your files this new code will be reviewed and, if this new code is acceptable, it will be added to the Controlled Vocabulary listing of instruments.

3.3.2 When Entering More Than 65,000 Measurement Data Records

The user cannot add more than 65,000 data records in a single table due to the limitations of the Microsoft Excel© worksheets. However, there is one exception made for the records of the MAGIC_measurements table. In this table you can import as many measurements as required. If the software detects more than 65,000 records, it will automatically generate a table named MAGIC_measurements2, and so on.
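The overflow behaviour can be sketched as follows. This is an illustration under stated assumptions rather than the software's own code: the 65,000 limit and the name MAGIC_measurements2 come from the text above, while the `split_measurements` function and the continuation of the numbering (MAGIC_measurements3, and so on) are assumed.

```python
MAX_ROWS = 65_000  # per-table record limit quoted in the text


def split_measurements(records, base="MAGIC_measurements", max_rows=MAX_ROWS):
    """Split records over base, base2, base3, ... with at most max_rows each."""
    tables = {}
    for start in range(0, len(records), max_rows):
        index = start // max_rows + 1
        # The first table keeps the plain name; later ones get a number suffix.
        name = base if index == 1 else f"{base}{index}"
        tables[name] = records[start:start + max_rows]
    return tables
```

With 130,001 records, for example, this sketch yields three tables, the last of which holds a single record.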

3.3.3 When Starting Data Population from Measurement Files

It may be quite common that you start data population with measurement files that have been stored in the Standard MagIC Text File Format. When pursuing this route, first import all these text files into a SmartBook that has been prepared by applying the first six steps described for a typical file upload procedure. Follow this by running the Synchronize Names function from the Special menu to pre-fill the names of all Locations, Sites, Samples and Specimens (and others) throughout the tables of the SmartBook. Now you only have to complete these tables with some extra data and metadata.

When new records are added to these tables using the Synchronize Names function, they will appear in highlighted "light blue" and "pink" cells for easy detection. The originating data records are highlighted "purple" as well. You can remove the formatting again by pressing Ctrl Shift S or it will automatically be removed when you run the Prepare for Uploading function.

3.4 Examples

In this Chapter we will present examples of a few common tasks you most likely will perform during the uploading of your data. The examples are meant to give you a step-by-step visual summary of what you will have to do to complete these tasks.

3.4.1 Entering References in the ER_citations Table

In every SmartBook you have to enter the bibliographic information for your current contribution to the MagIC Database. You may also need to add references for publications from which you have been compiling additional data. Typically you would follow the next sequence of actions ...

3.5 Function by Function

3.5.1 Operations Menu

To open a new MagIC SmartBook file, choose the New File ... command (or ctrl-N) in the Operations menu. In a dialogbox you will be asked to Save the SmartBook File under your name of choice. The NewFile.xls is a default name that you can overwrite, as necessary. When clicking on the Save button, a new and empty MagIC SmartBook is created with 30 predefined tables (worksheets).

To open a MagIC SmartBook, choose the Open Existing File ... command (or ctrl-O) in the Operations menu. In a dialogbox you will be asked to browse and select the SmartBook file you would like to re-open in the console software.

Select the Close ... or the Close All ... commands in the Operations menu to close the active MagIC SmartBook file(s). Upon closing, the console software will ask you to save the file(s) first.

Click the Save ... command (or ctrl-S) in the Operations menu to save the active MagIC SmartBook file. This command has the same functionality as the Save button on the left-hand side of the toolbar itself. Clicking Ctrl Shift and the Save button [Windows platform only] will save the SmartBook file after cleaning up and reformatting all tables.

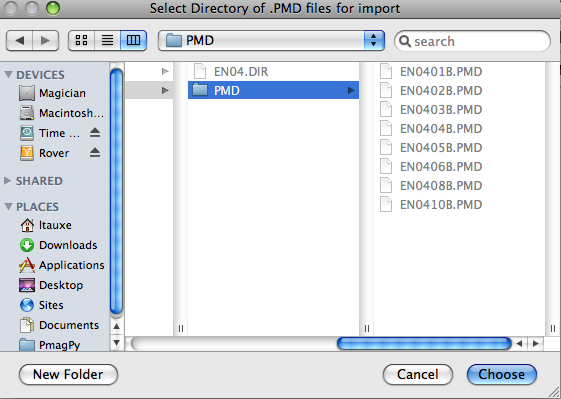

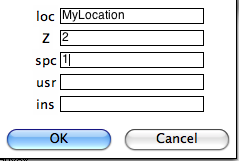

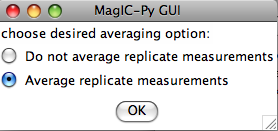

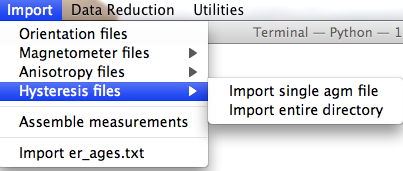

Click the Import Data Files ... command (or ctrl-M) in the Operations menu to import Standard MagIC Text Files with data to include in the SmartBook tables. An Import Data Files dialogbox appears in which you can browse for these standard text files. You can select multiple text files by holding down the Ctrl or Shift keys [Windows platform only]. When clicking on the Open button, the selected file(s) will be opened and the data will be imported into the MagIC SmartBook tables.

If the data in the Standard MagIC Text File to import don't have the expected format, an error message will appear. Note that data in a column with a misspelled Oracle Name will be ignored while importing the data. The software will give you an error message.

Click the Export command (or ctrl-E) in the Operations menu to export Standard MagIC Text Files. You will be asked to export data for the current (active) table only, or to export all tables in the entire MagIC SmartBook file. All data will be exported into a single tab delimited text file with your chosen file name.

To automatically Import data and immediately Prepare for Uploading (see above) choose the Batch Processing ... command from the Operations menu. You will be asked to select one or more Standard MagIC Text File(s) [multiple files are only allowed on the Windows platform] after which the data processing starts without any user interaction. If errors occur during the five Data Checks they will not cause a prompt, but instead they will be written to an Error Log. This is an efficient option if you prepare Standard MagIC Text Files outside of the MagIC Console Software and you only want to pass your data through the console to make them ready for uploading. Since all checks are performed without any interruption for your entire data contribution, the error logs give you a good overview of where you should improve your data and metadata collection.

When all data have been entered in the MagIC SmartBook choose the Prepare for Uploading command (or ctrl-F) in the Operations menu. You will be asked for a confirmation. If you click the Yes button, five Data Checks will first be performed before the data is saved in a single tab delimited text file. This may take several minutes, depending on the complexity and the amount of data stored in the SmartBook. Clicking the No button will cancel this action.

The five Data Checks include a SmartBook integrity check, a check for orphaned data, a general data check, a specific data check (for longitudes, citation style, data ranges, etc.) and an integrity check for all related data fields. If one of these checks returns an error, the Prepare for Uploading command will ask you to edit (or add) certain data and metadata. If you do not edit the particular field at this stage, you will cancel the Prepare for Uploading action.
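As an illustration of what one of these checks might involve, the sketch below looks for orphaned data (child records that point to a parent name that does not exist) and collects the problems in an error log, in the way batch processing writes errors to a log instead of prompting. None of this is the console's actual code: the `find_orphans` and `run_checks` functions are hypothetical, and the way the real checks are implemented is not described in this help library.

```python
def find_orphans(child_records, parent_records, key):
    """Return child records whose `key` value has no matching parent record."""
    parent_names = {p[key] for p in parent_records if p.get(key)}
    return [c for c in child_records if c.get(key) not in parent_names]


def run_checks(checks):
    """Run each (name, function) check in order and collect errors in a log.

    Nothing prompts the user here; every problem found is appended
    to the error log, which is returned at the end.
    """
    error_log = []
    for name, check in checks:
        for problem in check():
            error_log.append(f"{name}: {problem}")
    return error_log
```

An empty log from `run_checks` would correspond to a contribution that passes the checks included in the list.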

After the successful completion of the Prepare for Uploading command you are ready to upload your data on the MagIC Website. Go to http://earthref.org/MAGIC/upload.htm or click on the Upload Into MagIC Database command in the Operations menu.

3.5.2 Special Menu

The widths of all columns in a table may be automatically adjusted to Autofit the five header rows using the Autofit Columns command in the Special menu.

To clear all the data in a table (worksheet) choose the Clear Table command in the Special menu. You will be asked for a confirmation.

Add and Remove Method Definitions ...

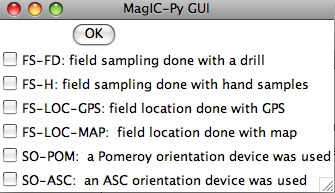

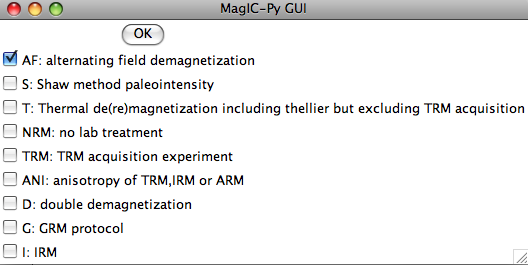

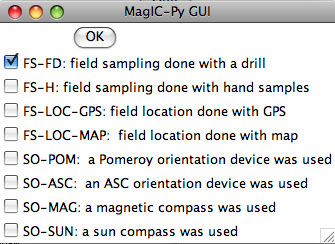

Each MagIC Project only requires a limited number of Method Definitions. By clicking on the Add and Remove Method Definitions ... command in the Special menu you can generate a list of Method Codes that describe the methods used in your project, including field sampling techniques, lab protocols, parameter estimations, and more (see the buttons at the top of this dialogbox for a complete list of categories). The Method Codes that are currently stored in your MagIC SmartBook file are listed in the In Use ... list box shown on the right-hand side.

You can Add new Method Codes by first selecting a method from the master listing of methods list box on the left-hand side, followed by clicking the right-pointing Double Arrow button. Show different categories of the Method Codes in this master listing by clicking on the Category Buttons in the top of this dialogbox.

You can Remove the Method Codes one-by-one by selecting the code in the In Use ... list box, followed by clicking the other left-pointing Double Arrow button. You can remove all methods at once by clicking on the Clear Selection(s) button. The program will ask you for a confirmation.

When you are finished compiling your list of Method Codes, click the Save button. This action will store all newly assigned Method Codes in the MAGIC_methods table, and it will add the appropriate references to the ER_citations table.

Add and Remove Instrument Definitions ...

Each MagIC Project only requires a limited number of Instrument Definitions. By clicking on the Add and Remove Instrument Definitions ... command in the Special menu you can generate a list of Instrument Codes that describes the instruments used in your project. The Instrument Codes that are currently stored in your MagIC SmartBook file are listed in the In Use ... list box shown on the right-hand side. The Instrument Codes should all start with an abbreviation indicating the host institution.

You can Add new Instruments by first selecting an instrument from the master listing of instruments list box on the left-hand side, followed by clicking the right-pointing Double Arrow button. You can search for Instruments by typing in (part of) a name in the top textbox, followed by clicking on the Find ... button.

You can Remove the Instruments one-by-one by selecting the code in the In Use ... list box, followed by clicking the left-pointing Double Arrow button. You can remove all instruments at once by clicking on the Clear Selection(s) button. The program will ask you for a confirmation.

When you are finished compiling your list of Instruments, click the Save button. This action will store all newly assigned Instrument Codes in the MAGIC_instruments table, and it will add the appropriate references to the ER_citations table.

Some names, such as Location, Site, Sample and Specimen names, appear in more than one place in the MagIC SmartBooks. Use the Synchronize Names function in the Special menu to automatically pre-fill these names throughout all the tables. This requires that you have filled out these names in at least one of the SmartBook tables. When new records are added to these tables they will appear in highlighted "light blue", "pink" and "purple" cells for easy detection. You can remove this formatting again by pressing Ctrl Shift S; it is also removed when you Prepare for Uploading.

You can detect and remove Duplicate Data Records in your tables by choosing Remove Duplicate Data Records from the Special menu. It is recommended that you first Highlight duplicate records. If you select this option, the potential duplicates are highlighted in "orange". If you opt to Remove the duplicates immediately, the duplicate record(s) will be deleted from your table instead, while the first instance will be retained. Note that this action cannot be undone.
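The difference between highlighting and removing can be pictured with a short Python sketch. It is not the MagIC Console's own code: it assumes that two records count as duplicates only when every field matches exactly, which the description above does not specify, and the function names and sample rows are hypothetical.

```python
def find_duplicates(records):
    """Return the indices of rows that exactly repeat an earlier row."""
    seen = set()
    duplicates = []
    for index, row in enumerate(records):
        key = tuple(row)
        if key in seen:
            duplicates.append(index)   # candidate for highlighting
        else:
            seen.add(key)              # first instance is always kept
    return duplicates


def remove_duplicates(records):
    """Return a new list that keeps only the first instance of each row."""
    duplicate_indices = set(find_duplicates(records))
    return [row for i, row in enumerate(records) if i not in duplicate_indices]


# Hypothetical rows: site name, sample name, a measured value
rows = [
    ["site01", "sample01", "12.5"],
    ["site01", "sample02", "13.1"],
    ["site01", "sample01", "12.5"],
]
flagged = find_duplicates(rows)
cleaned = remove_duplicates(rows)
```

Listing the flagged rows first, as the Highlight option does, gives you a chance to inspect them before anything is deleted.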

You can detect and remove Empty Data Records by choosing Remove Empty Data Records from the Special menu. You will be asked whether to remove empty data records from the current (active) table only, or from all tables in the entire MagIC SmartBook file. Note that this action cannot be undone.
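As a rough illustration, the Python sketch below treats a record as empty when every cell is blank or contains only whitespace. That definition is an assumption, as are the function names; the Console may apply a different rule.

```python
def is_empty(row):
    """True when every cell is None, empty, or whitespace only (assumed rule)."""
    return all(cell is None or str(cell).strip() == "" for cell in row)


def remove_empty_records(records):
    """Return a new list without the empty rows."""
    return [row for row in records if not is_empty(row)]


# Hypothetical table with one fully blank row
table = [
    ["site01", "45.0"],
    ["", "   "],
    ["site02", ""],
]
cleaned = remove_empty_records(table)
```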

Combine Two Similar Data Records

In some cases, a table may contain data records that are very similar. For example, two records (i.e. rows) may share the same sample number, while one contains inclination and declination data and the other contains only paleointensity data. Such data records can be combined (i.e. merged) into one record. To do so, select two similar data records in a table and choose Combine Two Similar Data Records from the Special menu. To select two rows, hold down the Ctrl key and click on any cell in the first row and then in the second row. If there are no data conflicts, the rows will be combined in the first row and the second row will be deleted. If the data cannot be combined, an error message is displayed and the data conflicts are highlighted in "orange". Note that this action cannot be undone.
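The merge rule can be sketched in Python as follows. This is an illustration under assumptions, not the Console's implementation: a conflict is taken to mean that both rows hold a non-blank value in the same column and the values differ, and the function name and sample values are hypothetical.

```python
def combine_records(first, second):
    """Merge two rows column by column.

    Returns (merged_row, conflicts). If any column holds two different
    non-blank values, merged_row is None and conflicts lists the column
    indices that would need to be highlighted.
    """
    if len(first) != len(second):
        raise ValueError("rows must have the same number of columns")

    def blank(value):
        return value is None or str(value).strip() == ""

    merged, conflicts = [], []
    for column, (a, b) in enumerate(zip(first, second)):
        if blank(a):
            merged.append(b)           # take the value from the second row
        elif blank(b) or a == b:
            merged.append(a)           # keep the value from the first row
        else:
            conflicts.append(column)   # both filled in, but different
            merged.append(a)
    if conflicts:
        return None, conflicts
    return merged, []


# Hypothetical columns: sample, inclination, declination, paleointensity
directions = ["sample01", "45.0", "350.2", ""]
intensity = ["sample01", "", "", "42.1"]
merged, conflicts = combine_records(directions, intensity)
```

Because the operation cannot be undone in the Console, checking for conflicts before changing either row, as this sketch does, is the safer order of operations.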

To verify that the data in one table have the expected format, choose the Check Data Records command in the Special menu. You will be asked to check data for the current (active) table only, or to check all tables in the entire MagIC SmartBook file.

You can also verify whether specific data (like latitudes, longitudes, citations, magnetic moments, paleointensities, flags) have the expected format and range of values. Some automatic repairs will be performed in the background during this data check (e.g. recasting longitudes from the -180/180 to the 0/360 notation); other checks may return error messages. To perform this function, choose the Check Specific Data Records command in the Special menu. You will be asked to check data for the current (active) table only, or to check all tables in the entire MagIC SmartBook file.
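The longitude repair mentioned above amounts to a modulo operation. A minimal Python sketch, assuming decimal degrees; the function name and the accepted input range are illustrative assumptions rather than the Console's actual rules.

```python
def recast_longitude(lon):
    """Convert a longitude from -180/180 notation to 0/360 notation."""
    if not -180.0 <= lon <= 360.0:
        # Assumed range check: values outside this range are reported, not repaired
        raise ValueError(f"longitude out of range: {lon}")
    return lon % 360.0


west = recast_longitude(-170.0)   # a western longitude
east = recast_longitude(45.0)     # eastern longitudes are unchanged
```

In Python the `%` operator returns a non-negative result for a positive divisor, so negative (western) longitudes map directly onto the 180 to 360 range.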

To validate whether the data in one table are correctly related to the other data tables in the SmartBook file, choose the Check Data Integrity command in the Special menu. You will be asked to check data for the current (active) table only, or to check all tables in the entire file. This action will search each table for existing relations and check them against their parent tables. If a relation is broken (i.e. the parent record does not exist, or you misspelled the daughter or parent record) then an error will occur. You will be asked to edit certain data and metadata.
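Conceptually, this check looks up each daughter record's parent name in the parent table. The Python sketch below illustrates the idea only; the field name `site_name`, the function name, and the sample records are hypothetical and do not reflect the Console's internals.

```python
def find_broken_relations(child_rows, parent_names, key):
    """Return indices of child rows whose parent name is missing.

    child_rows   -- list of dicts, one per daughter record
    parent_names -- names that exist in the parent table
    key          -- the field in each child row that names its parent
    """
    parents = set(parent_names)
    return [i for i, row in enumerate(child_rows) if row.get(key) not in parents]


# Hypothetical parent table (sites) and daughter table (samples)
sites = ["site01", "site02"]
samples = [
    {"sample_name": "s1", "site_name": "site01"},
    {"sample_name": "s2", "site_name": "site2"},   # misspelled parent
    {"sample_name": "s3", "site_name": "site02"},
]
broken = find_broken_relations(samples, sites, "site_name")
```

A misspelling on either side shows up the same way: the daughter's parent name simply has no exact match in the parent table.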

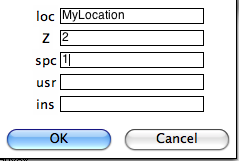

3.5.3 Data Entry and Editing Dialogbox

There are various ways to enter your data in the MagIC SmartBooks. One of these methods is the Edit Data option (or Ctrl Shift D) that you can find on the main MagIC Toolbar. In this dialogbox you can enter, edit and delete data on a record-by-record basis. If you want to edit an existing data record, first select the data record you want to edit by selecting any cell in its row in the Microsoft Excel© table, followed by clicking on the Edit Data button. You can also Double-Click on the cell. The complete record will be loaded in the dialogbox and will be ready for editing. Use the numbered Tabs on the upper left-hand side to show additional fields, if more than 15 data fields are available for a record.

Note that you can edit all fields, but your changes will only be applied to the MagIC SmartBook when you click the Close button, when you move through your records by using the Next and Previous buttons, or when clicking the New Record button. Temporary changes can be undone by Canceling the dialogbox.

To add a new record click the New Record button. With this action a new record is inserted at the bottom of the active table.

To delete the currently loaded data record from the MagIC SmartBook table click on the Delete button. You will be asked for a confirmation. Deletions cannot be undone.

To move between adjacent data records click on the Previous or Next buttons. You can also use the Alt P and Alt N shortcut keys for faster navigation. Note that each data record loaded in the Edit Data dialogbox is checked automatically when you move to the next or previous data record, or when you close the dialogbox.

If the Prefill Records checkbox is checked, a new record will be pre-filled with the data from the currently selected data record in the active table. Use this functionality when adding data records that have the majority of their data field entries in common. This can significantly speed up data entry for larger data sets.

Tab Navigation and Hot Buttons

To facilitate the efficient editing of your data in the Edit Data dialogbox, you can make use of the colored Hot Buttons on the right-hand side of this dialogbox, which will open Pop-Up dialogboxes (see below for two examples). These Hot Buttons can be divided into 3 different categories:

The Purple Buttons will provide you with a larger edit box to enter Long Text Entries.

Lists and Controlled Vocabularies

The Light Green and Light Blue Buttons provide you with Shortlists to select a single predefined item or multiple items (see example below). In case of the Light Blue Buttons your selection will create links towards other data tables in the MagIC SmartBook file. To select multiple items simply click on as many records in these lists as required.

Dark Blue Buttons help you enter names of Authors and Editors in the ER_citations table in the proper format, as expected for the MagIC and EarthRef.org databases. Use the add, edit and delete buttons to enter the correct author and editor information. These Dark Blue Buttons also give interactive dialogboxes to allow you to enter multiple keywords, ocean names, country names, etc.

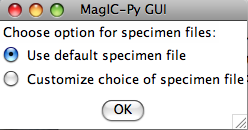

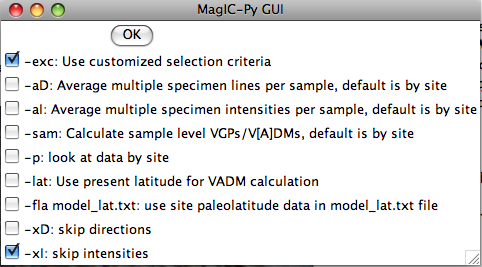

3.5.4 MagIC Wizard

Depending on the type and characteristics of a study, certain fields in the MagIC SmartBook tables may not be relevant and should be hidden. The MagIC Wizard can be launched from the MagIC Toolbar (or Ctrl Shift W) and allows you to customize the SmartBook in three steps. In effect, this tool will temporarily hide some tables and columns from view in Microsoft Excel© depending on the options you selected. Note that the selections made in the Wizard are only applied after clicking the Finish button.

In each of the three wizard steps (see the next page for an example) you can Check or Uncheck the checkboxes that are relevant to your study. As you will see, these options go from general in the first step to detailed in the following steps. When you uncheck a checkbox, the wizard may uncheck other checkboxes by default. You can always recheck these checkboxes if you require that data to appear in your MagIC SmartBook file.

Show All and Clear All Buttons

You can show all fields again by clicking the Show All button in the Wizard, followed by clicking the Finish button. Alternatively, you can click the Clear All button to uncheck all checkboxes.

You can also use Predefined Settings to more easily apply the needed settings. When you click the Use Predefined Settings ... button, another dialogbox appears with options like Classical Directional Study, Classical Intensity Study, Modern Paleomagnetic Study, Modern Stratigraphic or Drill Core Study, and so on.

3.5.5 Navigating a SmartBook

The data in each table may be sorted in ascending order using up to three columns as keys. To sort on only one column, select any cell in this column and click on the Sort button. To sort on two or three columns, hold down the Ctrl key and click on a cell in the first, second and third column, and then click on the Sort button. The order in which you select the columns determines the order of the sorting keys. Note that this action cannot be undone.
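Sorting on several keys means that the second column only decides the order of rows that tie on the first, and the third only of rows that tie on the first two. A small Python sketch of that behavior, with a hypothetical function name and sample rows; it compares cell values as text, which may differ from how Microsoft Excel© orders numbers.

```python
def sort_records(records, key_columns):
    """Return rows sorted ascending on one to three columns.

    key_columns lists column indices in the order they were selected,
    so the first index is the primary sorting key.
    """
    if not 1 <= len(key_columns) <= 3:
        raise ValueError("select one, two or three key columns")
    return sorted(records, key=lambda row: tuple(row[c] for c in key_columns))


# Hypothetical rows: site, sample, specimen
rows = [
    ["site02", "b", "2"],
    ["site01", "b", "1"],
    ["site01", "a", "3"],
]
by_site_then_sample = sort_records(rows, [0, 1])
```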

With this button you can move to the First Column in the active table without scrolling vertically. You can also use Ctrl Shift Home on your keyboard.

To move across multiple columns, use the left and right buttons on the toolbar, or hold down the Ctrl Shift keys while using the Arrow Keys on your keyboard. This will allow you to move from one set of columns that are visible in the active Microsoft Excel© window to the next (or previous) set, with an overlap of at least one or two columns. These actions do not cause any vertical scrolling.

With this button you can move to the Last Column in the active table without scrolling vertically. You can also use Ctrl Shift End on your keyboard.

Use this menu to navigate between different Tables in the active MagIC SmartBook file. This pull down menu will be automatically updated every time you switch between different SmartBooks using the SmartBooks toolbar menu (see below).

You can switch between different MagIC SmartBook files using the SmartBooks toolbar menu. If you activate another file through this menu the Tables menu (see above) will be automatically updated.

3.5.6 Text Tools

Select the cells to which you want to add some text, or from which you wish to remove text or overwrite it with some other text, in batch mode. Select the Add Text Tool option from the Text Tools menu. In this tool first type the Text to add ... and then select the Action you want to perform on the right-hand side. Apply these settings by clicking on the Start button. Note that this function cannot be undone.

Select the cells on which you want to perform some simple conversions. Then select the Number Conversion Tool option from the Text Tools menu. Type in the Conversion value ... and select one of the actions on the right-hand side. Click on the Start button to start applying the conversions. You can only perform one operation at a time.

Select the cells from which you want to remove all spaces. Select the Remove All Spaces option from the Text Tools menu. Note that this function cannot be undone.

Select the cells from which you want to remove extra spaces only. Select the Remove Extra Spaces Only option from the Text Tools menu. This function will remove all Double Spaces and any existing Leading and Trailing Spaces. Note that this function cannot be undone.
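In effect, runs of spaces are collapsed to a single space and the ends of the text are trimmed. A minimal Python sketch of that reading; the function name is hypothetical, and whether the Console also treats tabs or other whitespace this way is not stated here, so only the space character is handled.

```python
import re


def remove_extra_spaces(text):
    """Collapse runs of spaces to one and trim leading/trailing spaces."""
    collapsed = re.sub(r" {2,}", " ", text)   # double (or longer) runs of spaces
    return collapsed.strip(" ")               # leading and trailing spaces


cleaned = remove_extra_spaces("  Mid-Atlantic   Ridge  basalt ")
```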

Select the cells from which you want to remove all characters with the exception of numbers. Select the Remove All Characters option from the Text Tools menu. This function will remove all Non-Numeric Characters, except for the "-" (minus) and "." (period) symbols, so that it leaves only numeric values. It will also remove all Spaces. Note that this function cannot be undone.
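Read literally, the rule keeps only digits, the minus sign and the period. The Python sketch below implements that literal reading; the function name is hypothetical and the Console's exact behavior may differ.

```python
import re


def keep_numeric(text):
    """Drop every character except digits, '-' and '.' (spaces included)."""
    return re.sub(r"[^0-9.\-]", "", text)


value = keep_numeric("12.5 mT")
negative = keep_numeric("~ -45 deg")
```

Under this reading, any letter is removed, so a value written in scientific notation (with an "e" exponent marker) or followed by a unit that contains a digit would no longer be a valid number afterwards. It may be worth checking such cells by hand before applying the tool.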

Remove Parentheses and Brackets

Select the cells from which you want to remove all parentheses and brackets. Select the Remove Parentheses and Brackets option from the Text Tools menu. This function will remove all the "( )", "[ ]" and "{ }" symbols. Note that this function cannot be undone.
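The effect on a cell's text can be illustrated in Python with a translation table that deletes the six bracket characters. The function name and the sample text are hypothetical; note that only the symbols are removed, not the text they enclose.

```python
# Translation table that maps each bracket character to "deleted"
BRACKETS = str.maketrans("", "", "()[]{}")


def remove_brackets(text):
    """Remove all parentheses, square brackets and curly braces."""
    return text.translate(BRACKETS)


cleaned = remove_brackets("Basalt (altered) [flow 3]")
```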

Select the cells from which you want to remove strange symbols. Select the Remove Strange Symbols option from the Text Tools menu. This function will remove the "~!@#$%^&*_`'|<>? =" symbols and the "tab" character. Note that this function cannot be undone.

Select the cells from which you want to remove line feeds and carriage returns. Select the Remove Line Feeds option from the Text Tools menu. Note that this function cannot be undone.

Select the cells for which you want the text to appear in all lower case. Select the Make All Lower Case option from the Text Tools menu. Note that this function cannot be undone.

Select the cells for which you want the text to appear in all upper case. Select the Make All Upper Case option from the Text Tools menu. Note that this function cannot be undone.

Select the cells for which you want the text to appear in normal case, meaning that only the first letter of the text will appear in upper case, while the remainder will appear in lower case. Select the Make Normal Case option from the Text Tools menu. Note that this function cannot be undone.

Select the cells for which you want the text to appear with each word having normal case. This means that the first letter of each word in the text will appear in upper case, while the remainder of these words will appear in lower case. Select the Make Every Word Normal Case option from the Text Tools menu. Note that this function cannot be undone.
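The difference between the two "normal case" options is easiest to see side by side. A short Python sketch, assuming that words are separated by single spaces; the function names are hypothetical and the Console's handling of hyphens or punctuation may differ.

```python
def make_normal_case(text):
    """First letter of the text in upper case, the remainder in lower case."""
    return text.capitalize()


def make_every_word_normal_case(text):
    """First letter of each space-separated word in upper case, rest lower."""
    return " ".join(word.capitalize() for word in text.split(" "))


sample = "mid-atlantic RIDGE basalt"
normal = make_normal_case(sample)
every_word = make_every_word_normal_case(sample)
```

In this sketch a hyphenated word counts as one word, so only its first letter is capitalized.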

3.5.7 MagIC Help Menu

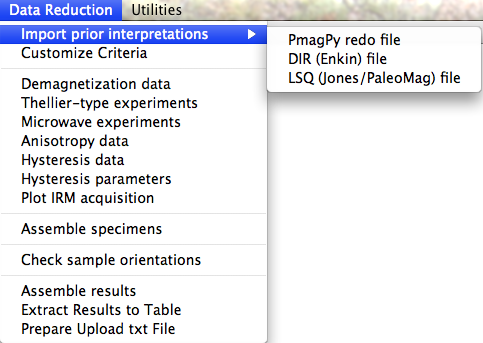

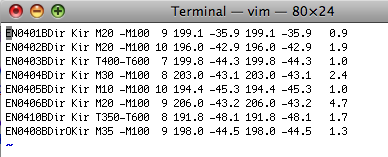

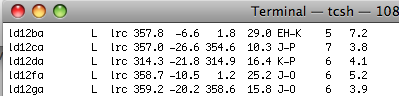

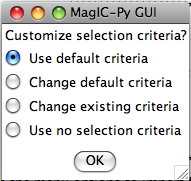

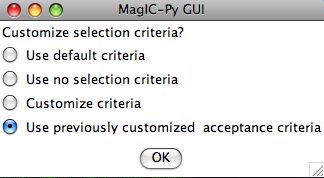

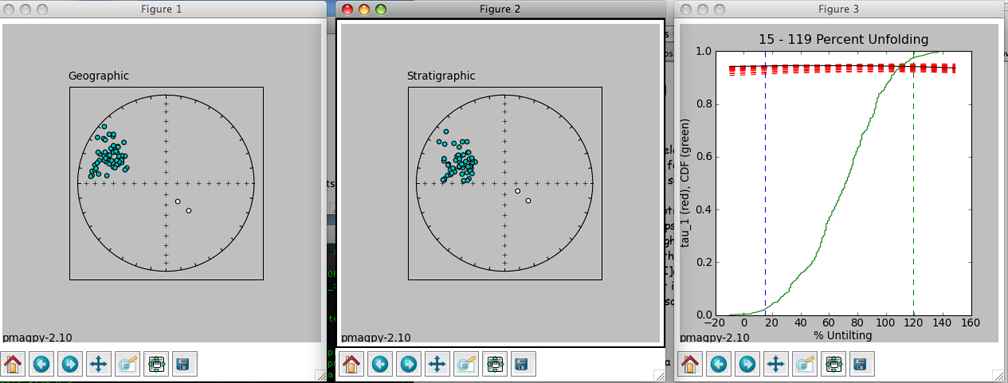

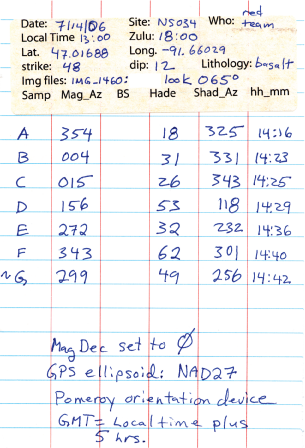

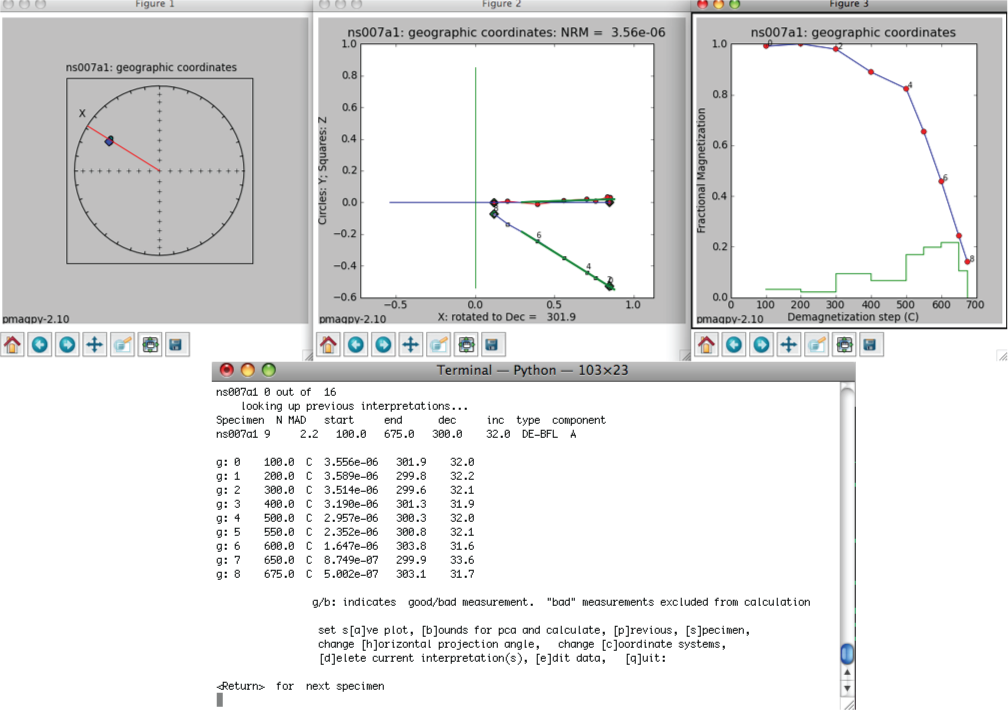

Select the Metadata and Data Model option from the MagIC Help menu to launch your browser and to review the current metadata and data definition. When entering this web page you will first see an overview of all Tables that define the MagIC SmartBook files. Click on a Table Name to view the definitions of the Records (columns) that define these tables, including short explanations and examples. Note that this function is equivalent to following the http://earthref.org/MAGIC/metadata.htm link. On this web form you can also do a free text search.